Comparing multiple-choice and open-ended items in listening tests: implications for testers and teachers

At this year’s Future of English Language Teaching (FoELT) conference, Andrew Fleck explored how different item types in listening tests engage learners’ listening processes and influence the way English is both tested and taught.

Andrew has over two decades of experience teaching EFL and EAP in Europe, South Korea and Britain. For the past six years he has been writing, reviewing and, more recently, managing test content for Trinity College London. In 2023, he completed an MA in Language Testing at Lancaster University; his dissertation on task types in listening tests was highly commended by the Caroline Clapham IELTS Masters Award committee.

Why item type matters

Andrew began by explaining that test item type is not just a technical choice but one that shapes how learners listen and how teachers teach. Test developers need to understand how item formats affect difficulty, discrimination and validity, while teachers want to know how those same formats influence learners’ listening strategies in the classroom.

By comparing multiple-choice and open-ended (short answer) items, Andrew’s research aimed to show how each type engages listening processes differently and what implications this might have for test design and teaching.

Listening processes in focus

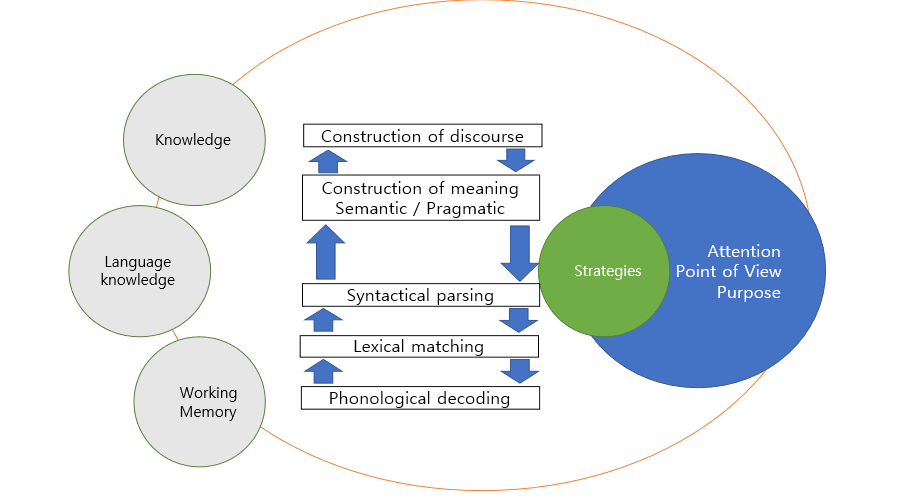

Drawing on various sources within the language testing literature, Andrew outlined how second language listeners process spoken input, distinguishing between lower-level processes, such as decoding sounds and recognising words, and higher-level processes, such as constructing meaning and identifying relationships between ideas. The diagram above, which draws on models by Michael Rost, John Field, and Larry Vandergrift and Christine Goh, summarises the various cognitive faculties involved in listening, with the ‘processes’ at the centre.

He noted that effective listening tests should engage both types of process to mirror how real-world listening occurs, and followed John Field in arguing that higher-level learners should be directed more towards the construction of meaning. The choice of question format can play a key role in doing this.

The research design

Andrew’s study reworked an existing set of multiple-choice items into short-answer items. Both versions were administered to 60 learners on an English for Academic Purposes (EAP) programme at a UK university. Participants then rated their level of comprehension for each recording on a scale running from not understanding, through partially and mostly understanding, to fully understanding the input. They also identified their approach to answering each item.

This provided data not only on comparative test scores but also on the strategies learners used and the degree of understanding they felt they had of the listening input.

Key findings

The findings revealed clear differences between the two formats:

· In the item development stage it was harder to rework multiple-choice questions aimed at higher-level listening processes into short-answer items.

· Multiple-choice items were generally easier and produced higher average scores than the open-ended equivalents, though the effect was unpredictable.

· Short-answer items tended to be more difficult and more discriminating.

· Multiple-choice items were more forgiving of candidates who ‘mostly understood’ what they were listening to, while short-answer items tended to be answered correctly only by those who ‘fully understood’.

· Test takers tended to report lower comprehension of audio input when they were answering open-ended questions.

· Successful candidates relied not on discrete strategies but on their natural understanding of the audio.

These results highlight how item type affects not only performance but also the kinds of listening processes that are activated.
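For readers less familiar with the testing terms used in the findings, ‘difficulty’ and ‘discrimination’ are commonly quantified with classical item statistics. The sketch below is a minimal illustration, assuming items scored 1 (correct) or 0 (incorrect). This summary does not say which statistics Andrew used, so the function names and the small response matrix are invented purely for illustration and are not data from the study.

```python
from math import sqrt


def item_facility(responses, item):
    """Proportion of test takers who answered the item correctly.

    A higher value means an easier item.
    """
    scores = [row[item] for row in responses]
    return sum(scores) / len(scores)


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def item_discrimination(responses, item):
    """Correlation between the item score and the score on the rest of the test.

    The item itself is left out of the total so it does not inflate the result.
    """
    item_scores = [row[item] for row in responses]
    rest_scores = [sum(row) - row[item] for row in responses]
    return pearson(item_scores, rest_scores)


# Hypothetical data: one row per test taker, one column per item.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 0],
    [1, 0, 0, 0],
]

for item in range(len(responses[0])):
    facility = item_facility(responses, item)
    discrimination = item_discrimination(responses, item)
    print(f"item {item}: facility={facility:.2f}, discrimination={discrimination:.2f}")
```

On this reading, an item that few candidates answer correctly has low facility, and an item answered correctly mainly by candidates who also do well on the rest of the test has high discrimination.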

Implications for test design and teaching

For test developers, Andrew noted that the choice between multiple-choice and short-answer items should be guided by the construct being measured and the other needs of the test. Where the goal is to assess a range of subskills, from identifying key information to making inferences and evaluating, multiple-choice questions are useful. Open-ended short-answer questions can be useful for testing understanding of precise information and can help to distinguish the very strongest candidates.

While teaching is not the same thing as testing, an awareness of the processes involved in listening can help teachers to analyse where learners’ comprehension is breaking down. Unlike testers, teachers can get to the root of a comprehension error, analysing exactly what a learner misunderstood and what they need to learn to fix it: an analyse-and-repair approach.

Broader reflections

Andrew’s presentation highlighted how useful an awareness of the cognitive processes underlying listening is for both testers and teachers. He also noted that more research into how item types interact with proficiency levels could help refine test design further, ensuring fairness and authenticity across contexts.

Key takeaways

| Theme | Key idea |
| --- | --- |
| Listening test design | Item type influences both the difficulty and validity of listening assessments. |
| Cognitive processes | Multiple-choice and open-ended items engage different listening skills and strategies. |
| Learner experience | Multiple-choice items can help facilitate a focus on meaning, rather than decoding of words and grammar. |
| Classroom implications | An awareness of listening processes can assist an analyse-and-repair approach to teaching. |
| Construct validity | Choosing the right item type depends on what the test aims to measure. |

Final thought

Andrew’s research shows that how we assess listening directly affects how it is taught and learned. By understanding the impact of different item types, both testers and teachers can make informed decisions that support more authentic, balanced and meaningful approaches to listening development.